BERT Is a State-of-the-Art Sentence Embedding Model Recently Released by Google

BERT Explained: State of the art language model for NLP

BERT (Bidirectional Encoder Representations from Transformers) is a recent paper published by researchers at Google AI Language. It has caused a stir in the Machine Learning community by presenting state-of-the-art results in a wide variety of NLP tasks, including Question Answering (SQuAD v1.1), Natural Language Inference (MNLI), and others.

BERT's key technical innovation is applying the bidirectional training of Transformer, a popular attention model, to language modelling. This contrasts with previous efforts, which looked at a text sequence either from left to right or combined left-to-right and right-to-left training. The paper's results show that a bidirectionally trained language model can have a deeper sense of language context and flow than single-direction language models. In the paper, the researchers detail a novel technique named Masked LM (MLM), which allows bidirectional training in models where it was previously impossible.

Background

In the field of computer vision, researchers have repeatedly shown the value of transfer learning. This means pre-training a neural network model on a known task, for instance ImageNet, and then fine-tuning it, using the trained network as the basis of a new purpose-specific model. In recent years, researchers have shown that a similar technique can be useful in many natural language tasks.

A different approach, also popular in NLP tasks and exemplified in the recent ELMo paper, is feature-based training. In this approach, a pre-trained neural network produces word embeddings, which are then used as features in NLP models.

How BERT works

BERT makes use of Transformer, an attention mechanism that learns contextual relations between words (or sub-words) in a text. In its vanilla form, Transformer includes two separate mechanisms: an encoder that reads the text input and a decoder that produces a prediction for the task. Since BERT's goal is to generate a language model, only the encoder mechanism is necessary. The detailed workings of Transformer are described in a paper by Google.

Directional models read the text input sequentially, either left-to-right or right-to-left. The Transformer encoder instead reads the entire sequence of words at once. It is therefore considered bidirectional, though it would be more accurate to call it non-directional. This characteristic allows the model to learn the context of a word based on all of its surroundings, both to the left and to the right of the word.

The chart below is a high-level description of the Transformer encoder. The input is a sequence of tokens, which are first embedded into vectors and then processed in the neural network. The output is a sequence of vectors of size H, in which each vector corresponds to the input token with the same index.
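The following minimal sketch in plain Python makes the "reads the entire sequence at once" idea concrete. It implements single-head scaled dot-product self-attention. It is a simplification and not BERT's actual encoder layer, which also has multiple heads, a feed-forward sublayer, residual connections and layer normalization. The function names and the `causal` flag are illustrative assumptions.

Each input row is one token's H-dimensional vector, and the output has one vector per input position. Setting `causal=True` hides tokens to the right, which is what a left-to-right model does.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax; scores of -inf get probability 0."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix,
                   causal: bool = False) -> Matrix:
    """Single-head scaled dot-product self-attention over a token sequence.

    x has one row (an H-dimensional vector) per token. With causal=False every
    position attends to every position, left and right (encoder style). With
    causal=True position i only sees positions 0..i (left-to-right style).
    """
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    n, d = len(x), len(k[0])
    out = []
    for i in range(n):
        scores = [
            float("-inf") if causal and j > i
            else sum(q[i][t] * k[j][t] for t in range(d)) / math.sqrt(d)
            for j in range(n)
        ]
        weights = softmax(scores)
        # Each output vector is an attention-weighted average of the value vectors.
        out.append([sum(weights[j] * v[j][c] for j in range(n)) for c in range(len(v[0]))])
    return out
```

With `causal=False`, changing the last token changes the output at the first position. With `causal=True` it cannot, and that is exactly the context limitation a directional model has.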

Training a language model requires defining a prediction goal, and this is a challenge. Many models predict the next word in a sequence (e.g. "The child came home from ___"), a directional approach that inherently limits context learning. To overcome this challenge, BERT uses two training strategies:

Masked LM (MLM)

Before word sequences are fed into BERT, 15% of the words in each sequence are replaced with a [MASK] token. The model then attempts to predict the original value of the masked words, based on the context provided by the other, non-masked, words in the sequence. In technical terms, the prediction of the output words requires:

- Adding a classification layer on top of the encoder output.

- Multiplying the output vectors by the embedding matrix, transforming them into the vocabulary dimension.

- Calculating the probability of each word in the vocabulary with softmax.
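The three steps above can be sketched for a single masked position as follows. The sketch makes two simplifying assumptions. First, the added layer is a plain affine transform; the released implementation also applies an activation and layer normalization and adds an output bias. Second, all names are hypothetical.

Reusing the token embedding matrix means that the score for vocabulary word v is the dot product of the transformed vector with v's embedding.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def affine(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Compute x @ w + b for x of size H_in and w of shape (H_in x H_out)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def softmax(logits: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mlm_probabilities(h: Vector, w: Matrix, b: Vector, embeddings: Matrix) -> Vector:
    """Vocabulary distribution for one masked position.

    h: encoder output vector (size H) at the masked position.
    w, b: the extra layer added on top of the encoder (H x H weights, H biases).
    embeddings: token embedding matrix, one row of size H per vocabulary word.
    """
    # Step 1: the layer added on top of the encoder output.
    transformed = affine(h, w, b)
    # Step 2: multiply by the embedding matrix to get one score per vocabulary word.
    logits = [sum(t * e for t, e in zip(transformed, row)) for row in embeddings]
    # Step 3: softmax turns the scores into probabilities.
    return softmax(logits)
```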

The BERT loss function considers only the predictions for the masked values and ignores the predictions for the non-masked words. As a consequence, the model converges more slowly than directional models, a drawback that is offset by its increased context awareness (see Takeaways #3).
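Below is a minimal sketch of a loss that ignores non-masked positions. It assumes the model has already produced a probability distribution for each position. It also assumes that unselected positions carry the label `None`, a convention chosen here rather than taken from the paper.

```python
import math
from typing import List, Optional


def masked_lm_loss(probs: List[List[float]], labels: List[Optional[int]]) -> float:
    """Mean cross-entropy over the masked positions only.

    probs[i] is the predicted vocabulary distribution at position i.
    labels[i] is the original token id if position i was selected for
    prediction, or None otherwise; None positions are skipped entirely.
    """
    losses = [-math.log(p[label]) for p, label in zip(probs, labels) if label is not None]
    if not losses:
        raise ValueError("no masked positions in this sequence")
    return sum(losses) / len(losses)
```

Only the selected positions contribute to the loss. With 15% of tokens selected, most positions in a batch provide no training signal, which is why the model converges more slowly.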

Note: In practice, the BERT implementation is slightly more elaborate and doesn't replace all of the 15% masked words. See Appendix A for additional information.

Next Sentence Prediction (NSP)

In the BERT training process, the model receives pairs of sentences as input and learns to predict whether the second sentence in the pair is the subsequent sentence in the original document. During training, in 50% of the inputs the second sentence is the subsequent sentence in the original document. In the other 50%, a random sentence from the corpus is chosen as the second sentence. The assumption is that the random sentence will be disconnected from the first sentence.
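The 50/50 pairing scheme can be sketched as below. The function name and the representation of documents as lists of sentence strings are assumptions made for illustration.

This sketch draws negatives from the whole corpus. A "random" second sentence can therefore occasionally be the true next sentence, which is why disconnection is only an assumption.

```python
import random
from typing import List, Tuple


def make_nsp_pairs(docs: List[List[str]], rng: random.Random) -> List[Tuple[str, str, bool]]:
    """Build (sentence_a, sentence_b, is_next) examples for next sentence prediction.

    For each consecutive sentence pair in a document, keep the true next
    sentence with probability 0.5 (is_next=True); otherwise replace it with a
    sentence drawn at random from the whole corpus (is_next=False).
    """
    corpus = [s for doc in docs for s in doc]
    pairs = []
    for doc in docs:
        for a, b in zip(doc, doc[1:]):
            if rng.random() < 0.5:
                pairs.append((a, b, True))
            else:
                pairs.append((a, rng.choice(corpus), False))
    return pairs
```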

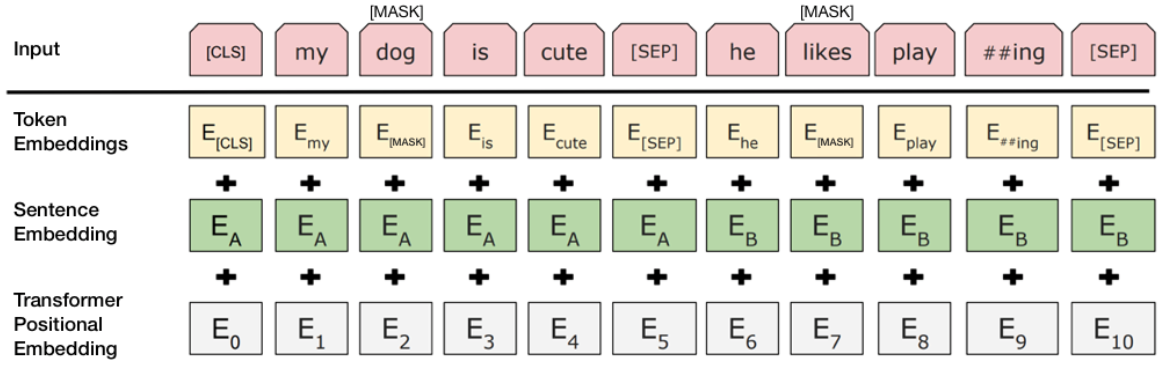

To help the model distinguish between the two sentences in training, the input is processed in the following way before entering the model:

- A [CLS] token is inserted at the beginning of the first sentence and a [SEP] token is inserted at the end of each sentence.

- A sentence embedding indicating Sentence A or Sentence B is added to each token. Sentence embeddings are similar in concept to token embeddings with a vocabulary of 2.

- A positional embedding is added to each token to indicate its position in the sequence. The concept and implementation of positional embedding are presented in the Transformer paper.
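The packing described above can be sketched as follows. The sketch assumes the two sentences are already tokenized, and it uses hypothetical lookup tables in place of learned embeddings.

Segment id 0 marks sentence A, including [CLS] and the first [SEP]. Segment id 1 marks sentence B. The three embeddings for a token are summed to form its input vector.

```python
from typing import List, Tuple


def build_input(tokens_a: List[str], tokens_b: List[str]) -> Tuple[List[str], List[int], List[int]]:
    """Pack a tokenized sentence pair as [CLS] A [SEP] B [SEP].

    Returns the tokens, a segment id per token (0 = sentence A, 1 = sentence B)
    and a position index per token.
    """
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    position_ids = list(range(len(tokens)))
    return tokens, segment_ids, position_ids


def embed(token_ids: List[int], segment_ids: List[int], position_ids: List[int],
          token_table: List[List[float]], segment_table: List[List[float]],
          position_table: List[List[float]]) -> List[List[float]]:
    """Sum the token, segment and position embeddings for each input position."""
    return [
        [t + s + p for t, s, p in zip(token_table[ti], segment_table[si], position_table[pi])]
        for ti, si, pi in zip(token_ids, segment_ids, position_ids)
    ]
```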

To predict whether the second sentence is indeed connected to the first, the following steps are performed:

- The entire input sequence goes through the Transformer model.

- The output of the [CLS] token is transformed into a 2×1 shaped vector, using a simple classification layer (learned matrices of weights and biases).

- Calculating the probability of IsNextSequence with softmax.
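The classification step above can be sketched as a 2×H weight matrix and a bias applied to the [CLS] output vector, followed by softmax. The label order, with row 0 scoring IsNext, is an assumption of this sketch. The released implementation also passes the [CLS] vector through a tanh "pooler" layer before this classifier; the sketch omits that step.

```python
import math
from typing import List


def nsp_probability(cls_vector: List[float], w: List[List[float]], b: List[float]) -> float:
    """Return P(IsNext) from the encoder output at the [CLS] position.

    w has shape (2 x H) and b has length 2. Row 0 scores "IsNext" and row 1
    scores "NotNext"; this label order is an assumption of the sketch.
    """
    # Two logits from a simple linear classification layer.
    logits = [sum(wi * xi for wi, xi in zip(row, cls_vector)) + bias
              for row, bias in zip(w, b)]
    # Softmax over the two classes, keeping only the IsNext probability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    return exps[0] / sum(exps)
```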

When training the BERT model, Masked LM and Next Sentence Prediction are trained together, with the goal of minimizing the combined loss function of the two strategies.
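The combined objective for one training example can be written as below. Here $M$ is the set of positions selected for prediction, $\tilde{x}$ is the corrupted input sequence and $y$ is the IsNext label. The paper describes the training loss as the sum of the mean masked LM and mean next sentence prediction likelihoods. The notation here is our own, written as negative log-likelihoods so that the objective is minimized.

$$
\mathcal{L} = \mathcal{L}_{\mathrm{MLM}} + \mathcal{L}_{\mathrm{NSP}}, \qquad
\mathcal{L}_{\mathrm{MLM}} = -\frac{1}{|M|} \sum_{i \in M} \log p\left(x_i \mid \tilde{x}\right), \qquad
\mathcal{L}_{\mathrm{NSP}} = -\log p\left(y \mid \tilde{x}\right)
$$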

How to use BERT (Fine-tuning)

Using BERT for a specific task is relatively straightforward. BERT can be used for a wide variety of language tasks by adding only a small layer to the core model:

- Classification tasks such as sentiment analysis are done similarly to Next Sentence classification, by adding a classification layer on top of the Transformer output for the [CLS] token.

- In Question Answering tasks (e.g. SQuAD v1.1), the software receives a question regarding a text sequence and is required to mark the answer in the sequence. Using BERT, a Q&A model can be trained by learning two extra vectors that mark the start and the end of the answer.

- In Named Entity Recognition (NER), the software receives a text sequence and is required to mark the various types of entities (Person, Organization, Date, etc.) that appear in the text. Using BERT, a NER model can be trained by feeding the output vector of each token into a classification layer that predicts the NER label.
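For the Q&A case, the following sketch shows one way to turn the two learned vectors into an answer. Each token's output vector T_i gets a start score S·T_i and an end score E·T_j. The predicted answer is the span i ≤ j with the highest combined score. The function and argument names are hypothetical, and the sketch omits refinements such as a maximum answer length.

```python
from typing import List, Tuple


def dot(a: List[float], b: List[float]) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def best_span(token_vectors: List[List[float]], start_vec: List[float],
              end_vec: List[float]) -> Tuple[int, int]:
    """Return (i, j) with i <= j maximizing dot(start_vec, T_i) + dot(end_vec, T_j)."""
    start_scores = [dot(start_vec, t) for t in token_vectors]
    end_scores = [dot(end_vec, t) for t in token_vectors]
    best, best_score = (0, 0), float("-inf")
    for i, s in enumerate(start_scores):
        # Only consider end positions at or after the start position.
        for j in range(i, len(end_scores)):
            if s + end_scores[j] > best_score:
                best_score, best = s + end_scores[j], (i, j)
    return best
```

The classification and NER bullets follow the same pattern with a different output layer. Classification uses a linear layer over the [CLS] vector, and NER uses one over every token vector.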

During fine-tuning, most hyper-parameters stay the same as in BERT pre-training, and the paper gives specific guidance (Section 3.5) on the hyper-parameters that require tuning. The BERT team has used this technique to achieve state-of-the-art results on a wide variety of challenging natural language tasks, detailed in Section 4 of the paper.
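The published version of the paper suggests a concrete starting point for this search:

- batch size: 16 or 32
- Adam learning rate: 5e-5, 3e-5 or 2e-5
- number of epochs: 2, 3 or 4

Other values, such as dropout (0.1), stay fixed. Below is a minimal grid-search sketch under those assumptions. `evaluate` stands for a hypothetical function that fine-tunes the model with a given configuration and returns a dev-set score.

```python
from itertools import product
from typing import Callable, Dict, List

# Search space as listed in the published BERT paper; other settings stay fixed.
BATCH_SIZES = [16, 32]
LEARNING_RATES = [5e-5, 3e-5, 2e-5]
EPOCHS = [2, 3, 4]

Config = Dict[str, float]


def fine_tuning_grid() -> List[Config]:
    """All combinations of the suggested fine-tuning hyper-parameters."""
    return [{"batch_size": b, "learning_rate": lr, "epochs": e}
            for b, lr, e in product(BATCH_SIZES, LEARNING_RATES, EPOCHS)]


def pick_best(evaluate: Callable[[Config], float]) -> Config:
    """Return the configuration with the highest dev-set score.

    `evaluate` is a hypothetical callback (fine-tune, then score on dev data);
    it is not part of any real library.
    """
    return max(fine_tuning_grid(), key=evaluate)
```

The grid has 18 configurations. The paper also reports that large datasets (100k+ labeled examples) are far less sensitive to these choices than small ones, so a full sweep matters most for small tasks.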

Takeaways

- Model size matters, even at huge scale. BERT_large, with 340 million parameters, is the largest model of its kind. It is demonstrably superior on small-scale tasks to BERT_base, which uses the same architecture with "only" 110 million parameters.

- With enough training data, more training steps == higher accuracy. For example, on the MNLI task, BERT_base accuracy improves by 1.0% when trained for 1M steps (with a batch size of 128,000 words) compared to 500K steps with the same batch size.

- BERT's bidirectional approach (MLM) converges more slowly than left-to-right approaches, because only 15% of words are predicted in each batch. Even so, bidirectional training outperforms left-to-right training after a small number of pre-training steps.
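The parameter counts in the first takeaway can be checked with a back-of-the-envelope calculation. The sketch assumes the configuration of the released English uncased checkpoints:

- a 30,522-entry WordPiece vocabulary
- 512 positions
- 2 segment types
- a feed-forward size of 4H

It counts the weights, biases and layer-norm parameters of the encoder plus the [CLS] pooler layer, and it excludes the pre-training output heads.

```python
def bert_param_count(hidden: int, layers: int, vocab: int = 30522,
                     max_positions: int = 512, segment_types: int = 2) -> int:
    """Approximate BERT encoder parameter count (pre-training heads excluded)."""
    ffn = 4 * hidden  # feed-forward inner size (assumed 4H, as in BERT_base and BERT_large)
    # Token, position and segment tables, plus one LayerNorm (scale and shift).
    embeddings = (vocab + max_positions + segment_types) * hidden + 2 * hidden
    # Q, K, V and output projections (weights + biases), plus LayerNorm.
    attention = 4 * (hidden * hidden + hidden) + 2 * hidden
    # Two dense layers H -> 4H -> H (weights + biases), plus LayerNorm.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden) + 2 * hidden
    # Dense layer applied to the [CLS] output.
    pooler = hidden * hidden + hidden
    return embeddings + layers * (attention + feed_forward) + pooler
```

For H=768 with 12 layers (BERT_base) this gives 109,482,240 parameters. For H=1024 with 24 layers (BERT_large) it gives 335,141,888. Both are in line with the rounded 110M and 340M figures reported in the paper.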

Compute considerations (training and applying)

Conclusion

BERT is undoubtedly a breakthrough in the use of Machine Learning for Natural Language Processing. The fact that it's approachable and allows fast fine-tuning will likely enable a wide range of practical applications in the future. In this summary, we attempted to describe the main ideas of the paper without drowning in excessive technical details. For those wishing for a deeper dive, we highly recommend reading the full article and the ancillary articles referenced in it. Another useful reference is the BERT source code and models, which cover 103 languages and were generously released as open source by the research team.

Appendix A — Word Masking

Training the language model in BERT is done by predicting 15% of the tokens in the input, which are picked at random. These tokens are pre-processed as follows: 80% are replaced with a "[MASK]" token, 10% with a random word, and 10% keep the original word. The intuition that led the authors to pick this approach is as follows (thanks to Jacob Devlin from Google for the insight):

- If we used [MASK] 100% of the time, the model wouldn't necessarily produce good token representations for non-masked words. The non-masked tokens would still be used for context, but the model would be optimized only for predicting masked words.

- If we used [MASK] 90% of the time and random words 10% of the time, this would teach the model that the observed word is never correct.

- If we used [MASK] 90% of the time and kept the same word 10% of the time, then the model could just trivially copy the non-contextual embedding.

No ablation was done on the ratios of this approach, and it may have worked better with different ratios. In addition, the model's performance wasn't tested with simply masking 100% of the selected tokens.
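The selection and 80/10/10 replacement described at the start of this appendix can be sketched as follows. The sketch makes these assumptions:

- tokens are plain strings
- the number of positions to predict is 15% of the sequence length, rounded, and at least one
- special tokens such as [CLS] and [SEP] are not handled

All names are hypothetical. The returned labels mark which positions the loss should be computed on.

```python
import random
from typing import List, Optional, Tuple


def mask_tokens(tokens: List[str], vocab: List[str], rng: random.Random,
                predict_frac: float = 0.15) -> Tuple[List[str], List[Optional[str]]]:
    """BERT-style corruption of one token sequence.

    Picks round(predict_frac * len(tokens)) positions (at least one) at random.
    Each picked token becomes [MASK] with probability 0.8, a random vocabulary
    word with probability 0.1, and stays unchanged with probability 0.1.
    Returns the corrupted tokens and, per position, the original token the
    model must predict (None where no prediction is made).
    """
    corrupted = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    n_predict = min(len(tokens), max(1, round(len(tokens) * predict_frac)))
    for i in rng.sample(range(len(tokens)), n_predict):
        labels[i] = tokens[i]
        r = rng.random()
        if r < 0.8:
            corrupted[i] = "[MASK]"
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)
        # Otherwise (r >= 0.9) the original token is kept in place.
    return corrupted, labels
```

Positions hit by the 10% "keep" branch look unchanged in the input but still appear in `labels`. As a result, the model cannot tell which visible tokens it will be asked to predict.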

For more summaries of recent Machine Learning research, check out Lyrn.AI.

Source: https://towardsdatascience.com/bert-explained-state-of-the-art-language-model-for-nlp-f8b21a9b6270
